Forging a Path Forward: Japan’s Universities Face Challenging Future

Global University Rankings: How Are They Measured?


I was shocked by an article in the morning edition of the Nikkei (Nihon Keizai Shimbun) on November 2, 2015. It reported that the government was introducing numerical targets to measure the results of its Fifth Science and Technology Basic Plan, which will form the core of its science and technology policy for the next five years (fiscal 2016–20), and that one of those targets would be the global rankings of Japanese universities.

On December 10, the Council for Science, Technology, and Innovation revealed its proposed draft of the new basic plan. The draft did not refer explicitly to global university rankings as a target, but it did mention “international comparison of universities.” The meaning of this term is not clear, but if it refers to global rankings, I fear that the selection of this target will have unfortunate consequences.

Global university rankings were first adopted as a quantitative target for public policy in the Japan Revitalization Strategy announced in June 2013. Drawn up by the Industrial Competitiveness Council, this strategy set the goal of having “at least 10 [Japanese universities] among the top 100 in the global ranking of universities.” On this basis, global university rankings came to be incorporated as targets in Japan’s national plans, programs, policies, and measures for universities.

Rankings That Favor Bigger Universities

The three best-known global university rankings are the Academic Ranking of World Universities (ARWU), started by Shanghai Jiaotong University in 2003; the World University Rankings produced by Times Higher Education (THE) since 2004; and the QS World University Rankings produced by Quacquarelli Symonds since 2010.(*1) In my opinion, the ARWU, also known as the Shanghai Ranking, is best not used as a reference. I have several reasons for this view, but the most serious is that for indicators making up 90% of its weighting, the scores of universities that perform identically in all other respects are proportional to their size. This produces strange results. If, for example, the seven Japanese national universities that were formerly imperial universities were merged into a single institution, it would probably lead the world in the ARWU. The THE and QS rankings also leave me dissatisfied because they use reputation, which is linked to size, as their largest single factor, though they are less biased toward size than the ARWU.(*2)
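The size effect can be illustrated with a minimal sketch. It assumes, for illustration only, that an indicator's raw value equals per-capita output multiplied by staff size and that scores are scaled so the top institution receives 100; the university names and all numbers are hypothetical, not ARWU data.

```python
from __future__ import annotations

# All figures below are hypothetical; they are not ARWU data.


def scaled_scores(raw: dict[str, float]) -> dict[str, float]:
    """Scale raw indicator values so the top institution scores 100."""
    top = max(raw.values())
    return {name: 100 * value / top for name, value in raw.items()}


# Three hypothetical universities with identical output per staff member.
PER_CAPITA_OUTPUT = 0.5
staff = {"Large U": 4000, "U-A": 2500, "U-B": 2000}

# A size-proportional indicator: raw value = per-capita output x staff size.
raw = {name: PER_CAPITA_OUTPUT * n for name, n in staff.items()}
print(scaled_scores(raw))

# Merge U-A and U-B: their raw values simply add up, while the
# per-capita performance of every institution stays the same.
merged = {"Large U": raw["Large U"], "Merged U": raw["U-A"] + raw["U-B"]}
print(scaled_scores(merged))
```

Although all three perform identically per staff member, U-A and U-B score 62.5 and 50 against Large U's 100; once merged, the combined institution scores 100 and pushes Large U down to about 89.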

THE and QS use similar methodologies, and their results tend to be similar. But there are differences: in 2015, for example, QS ranked five Japanese universities in its top 100, while THE included only two. Some results are puzzling, such as those for Tokyo Institute of Technology (where I work), which rose to 56th place in the 2015 QS ranking but fell sharply to the 201–250 group in the THE ranking for the same year.

Although their methodologies are similar, each organization builds its rankings from its own distinct perspective, so it is only natural for their results to differ. Indeed, rankings of all sorts, not just those of universities, and assessments in general reflect the viewpoints of those who make them; that is inherent in the assessment process. Even so, divergent results like those for Tokyo Tech leave me with lingering doubts about these rankings.

The Impact of Reputation on Japanese Universities’ Rankings

Table 1 presents the indicators and weights that THE and QS use as the basis for their rankings. The indicators differ somewhat, but both organizations place the greatest weight on surveys of universities’ reputations, followed by scores based on citations of the research papers they produce.(*3)

Table 1. Ranking Indicators and Weights

THE

| Category | Indicator | Weight |
|---|---|---|
| Teaching | Reputation survey | 15% |
| | Staff-to-student ratio | 4.5% |
| | Doctorate-to-bachelor’s ratio | 2.25% |
| | Doctorates awarded-to-academic-staff ratio | 6% |
| | Institutional income | 2.25% |
| Research | Reputation survey | 18% |
| | Research income | 6% |
| | Research productivity | 6% |
| Citations | Citations per paper | 30% |
| International outlook | International-to-domestic-student ratio | 2.5% |
| | International-to-domestic-staff ratio | 2.5% |
| | International collaboration | 2.5% |
| Industry income | Income from industry per academic staff member | 2.5% |

QS

| Indicator | Weight |
|---|---|
| Academic reputation | 40% |
| Employer reputation | 10% |
| Student-to-faculty ratio | 20% |
| Citations per faculty | 20% |
| International faculty ratio | 5% |
| International student ratio | 5% |

Sources: https://www.timeshighereducation.com/news/ranking-methodology-2016 (THE) and http://www.topuniversities.com/university-rankings-articles/world-university-rankings/qs-world-university-rankings-methodology (QS).
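Both rankings combine their indicators into an overall score as a weighted sum. The sketch below uses the weights from Table 1; the indicator keys and the 0–100 indicator scores are hypothetical, and each organization's own conversion of raw data into those scores is not modeled.

```python
# Weights from Table 1, expressed as fractions. Key names are hypothetical labels.
QS_WEIGHTS = {
    "academic_reputation": 0.40,
    "employer_reputation": 0.10,
    "student_faculty_ratio": 0.20,
    "citations_per_faculty": 0.20,
    "international_faculty": 0.05,
    "international_students": 0.05,
}

THE_WEIGHTS = {
    "teaching_reputation": 0.15,
    "staff_student_ratio": 0.045,
    "doctorate_bachelor_ratio": 0.0225,
    "doctorates_per_staff": 0.06,
    "institutional_income": 0.0225,
    "research_reputation": 0.18,
    "research_income": 0.06,
    "research_productivity": 0.06,
    "citations": 0.30,
    "international_students": 0.025,
    "international_staff": 0.025,
    "international_collaboration": 0.025,
    "industry_income": 0.025,
}


def overall_score(scores, weights):
    """Weighted sum of 0-100 indicator scores; the weights must total 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[k] * scores[k] for k in weights)


def reputation_share(weights):
    """Total weight carried by reputation-survey indicators."""
    return sum(w for k, w in weights.items() if "reputation" in k)


print(round(reputation_share(THE_WEIGHTS), 4), round(reputation_share(QS_WEIGHTS), 4))

# A hypothetical university scoring 50 on every QS indicator...
base = {k: 50.0 for k in QS_WEIGHTS}
# ...and the same university with academic reputation raised to 60.
boosted = dict(base, academic_reputation=60.0)
print(round(overall_score(boosted, QS_WEIGHTS) - overall_score(base, QS_WEIGHTS), 2))
```

By Table 1's arithmetic, reputation surveys carry 33% of THE's weight and 50% of QS's, so under QS a 10-point rise in academic reputation alone lifts the overall score by 4 points.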

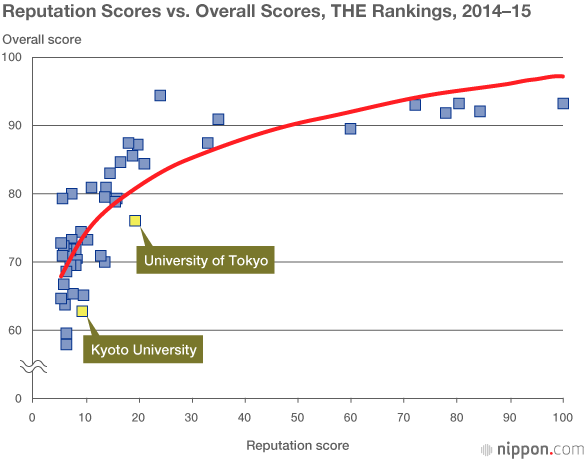

Each spring THE also releases a set of rankings based solely on reputation. Some people in Japan believe that reputation rankings put Japanese universities at a disadvantage. For the University of Tokyo and Kyoto University, at least, this appears to be a misconception. In the accompanying chart I have plotted the results for 50 universities from the 2014–15 THE rankings, with reputation scores on the x-axis and overall scores on the y-axis. The curve is a logarithmic regression fit. Universities above and to the left of the curve have reputation scores that are low relative to their overall scores, while those below and to the right have reputation scores that are high relative to their overall scores. As highlighted, the University of Tokyo and Kyoto University both fall in the latter group. In other words, reputation actually works to their advantage.
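The curve-fitting step can be sketched as ordinary least squares on overall = a + b·ln(reputation), with the sign of each residual telling which side of the curve a university falls on. That the fit was done this way is an assumption (the text says only "logarithmic regression"), and the five data points are hypothetical, not the THE figures.

```python
import math


def fit_log(xs, ys):
    """Ordinary least squares for y = a + b*ln(x); returns (a, b)."""
    lx = [math.log(x) for x in xs]
    n = len(xs)
    mean_lx = sum(lx) / n
    mean_y = sum(ys) / n
    sxy = sum((u - mean_lx) * (y - mean_y) for u, y in zip(lx, ys))
    sxx = sum((u - mean_lx) ** 2 for u in lx)
    b = sxy / sxx
    a = mean_y - b * mean_lx
    return a, b


def residuals(xs, ys, a, b):
    """Positive residual: overall score above the curve (reputation relatively low)."""
    return [y - (a + b * math.log(x)) for x, y in zip(xs, ys)]


# Hypothetical (reputation score, overall score) pairs.
data = {"U1": (90, 92), "U2": (40, 80), "U3": (60, 70), "U4": (20, 60), "U5": (55, 62)}
names = list(data)
xs = [data[n][0] for n in names]
ys = [data[n][1] for n in names]
a, b = fit_log(xs, ys)
for name, r in zip(names, residuals(xs, ys, a, b)):
    side = "above: reputation low vs overall" if r > 0 else "below: reputation high vs overall"
    print(f"{name}: residual {r:+.1f} ({side})")
```

A university with a negative residual, like the University of Tokyo and Kyoto University in the chart, has a higher reputation score than its overall score alone would predict.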

New Methodology Makes Many Japanese Universities Rank Lower

In the 2015 rankings from both THE and QS, Japanese universities’ results shifted sharply. THE showed particularly dramatic declines: the University of Tokyo dropped from 23rd to 43rd place, Kyoto University from 59th to 88th, Tokyo Tech from 141st to the 201–250 group, Osaka University from 157th to the 251–300 group, and Tōhoku University from 165th to the 201–250 group. Japanese universities also declined considerably in the QS rankings, with the exception of Tokyo Tech and Waseda University, which rose. These shifts were due largely to changes in the methodology for citations, which are second only to reputation in weight.

In 2015 QS introduced a citation count normalized by faculty area, to take account of differences in average citation rates between fields. This reduced the influence of the life sciences and medicine, which have high average citation rates, and increased that of engineering and technology. These changes appear to be responsible for the ranking shifts noted above.
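One simple way to normalize by faculty area is to rescale each area's citations so that every area contributes the same share of the worldwide total. The sketch below assumes that scheme for illustration; it is a simplified stand-in rather than QS's documented procedure, and the universities and counts are hypothetical.

```python
from collections import defaultdict

# Hypothetical citation counts: {university: {faculty_area: citations}}.
citations = {
    "MedHeavy U": {"life_sci_med": 9000, "engineering": 1000},
    "EngHeavy U": {"life_sci_med": 2000, "engineering": 3000},
}


def area_totals(data):
    """Total citations per faculty area across all universities."""
    totals = defaultdict(float)
    for by_area in data.values():
        for area, count in by_area.items():
            totals[area] += count
    return totals


def normalized_counts(data):
    """Rescale each area so it carries an equal share of the overall total."""
    totals = area_totals(data)
    target = sum(totals.values()) / len(totals)  # equal share per area (assumed scheme)
    return {
        uni: sum(count * target / totals[area] for area, count in by_area.items())
        for uni, by_area in data.items()
    }


raw_totals = {uni: sum(by_area.values()) for uni, by_area in citations.items()}
print(raw_totals)
print(normalized_counts(citations))
```

The overall total is unchanged, but the engineering-heavy university's count rises relative to the medicine-heavy one, mirroring the shift described above.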

THE also recalibrated its methodology. Previously it normalized citation data within each country to allow for national conditions, such as language and culture, that affect citation counts.(*4) In 2015, however, it cut this adjustment by half. As a result, scores fell sharply for Japanese universities, whose citation figures are below the global average. THE changed other indicators as well, and I have not yet been able to grasp their total impact.
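Why halving a country adjustment hurts universities in a below-average country can be shown with a small sketch. The formula is hypothetical, since THE has not clearly explained its method: citation impact is measured relative to a world average of 1.0, the "adjusted" value divides by the country's average, and the final score blends adjusted and unadjusted values. All figures are invented.

```python
def country_adjusted(impact, country_mean):
    """Compare a university with its own country's average instead of the world's."""
    return impact / country_mean


def blended(impact, country_mean, weight):
    """Mix unadjusted and country-adjusted impact; weight=1.0 means full adjustment."""
    return (1 - weight) * impact + weight * country_adjusted(impact, country_mean)


# Hypothetical university whose country averages below the world (mean < 1.0).
impact, country_mean = 1.2, 0.8
full = blended(impact, country_mean, weight=1.0)
half = blended(impact, country_mean, weight=0.5)
print(f"below-average country: full {full:.2f}, half {half:.2f}")

# For contrast, the same impact in an above-average country gains from halving.
print(f"above-average country: full {blended(impact, 1.25, 1.0):.2f}, "
      f"half {blended(impact, 1.25, 0.5):.2f}")
```

Under these assumptions the score drops from 1.50 to 1.35 for the university in the below-average country, while the same university in an above-average country rises from 0.96 to 1.08.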

It should be evident from the above that global university rankings, which can shift sharply with the thinking of the organizations that produce them, are nothing to fret over and are not appropriate for adoption as numerical targets. That said, we should be concerned about the current state of Japan’s universities, which these international rankings partly reflect.

Major Asian Institutes Quickly Gaining Ground

In autumn 2015 I used the Scopus citation database to compile figures for four Asian universities on papers cited more than 1,000 times.(*5) The results are presented in Table 2.

Table 2. Numbers of Papers Cited Over 1,000 Times

| University | Published 2000–14 | Published 2008–14 |
|---|---|---|
| University of Tokyo | 74 | 19 |
| Kyoto University | 47 | 9 |
| Peking University | 14 | 8 |
| National University of Singapore | 31 | 16 |

Note: As I tabulated the figures by visual inspection of the university names in the data, there may be omissions resulting from inconsistencies and errors in the notation of the names.

For papers published over the full period from 2000 through 2014, the University of Tokyo and Kyoto University hold substantial leads over the other two universities. But the figures for 2008–14 alone suggest that the other two may be catching up. This reflects the strong backing they receive from their respective governments. Japan’s universities should receive the same sort of support.
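The catching-up reading can be checked with simple arithmetic on Table 2. Because 2008–14 is part of the 2000–14 period, dividing the two columns gives the share of each university's highly cited papers that are recent. The counts are copied from Table 2 and carry the same caveats as its note.

```python
# Counts from Table 2: university -> (published 2000-14, published 2008-14).
TABLE_2 = {
    "University of Tokyo": (74, 19),
    "Kyoto University": (47, 9),
    "Peking University": (14, 8),
    "National University of Singapore": (31, 16),
}


def recent_share(total, recent):
    """Fraction of the full-period papers that were published in 2008-14."""
    if recent > total:
        raise ValueError("2008-14 papers must be a subset of the 2000-14 papers")
    return recent / total


for name, (total, recent) in TABLE_2.items():
    print(f"{name}: {recent_share(total, recent):.0%} of {total} papers from 2008-14")
```

Roughly a quarter of Tokyo's and a fifth of Kyoto's papers date from 2008–14, against more than half for Peking and NUS. Recent papers have had less time to accumulate citations, so these shares are best read as a comparison among the four universities rather than as absolute figures.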

Though the global rankings of universities have their shortcomings, the figures and related data that underlie them supply signals that we should seriously consider.

(Originally written in Japanese and published on January 19, 2016. Banner photo: Yasuda Auditorium, University of Tokyo (left), and Clock Tower Centennial Hall, Kyoto University.)

(*1) Until 2009, Quacquarelli Symonds cooperated with Times Higher Education in producing the latter’s annual rankings, but since 2010 it has released its own rankings.

(*2) For example, the California Institute of Technology placed first in THE’s 2015–16 rankings but does not score very high on reputation, which may be a result of its relatively small size.

(*3) THE and QS use different approaches to normalizing citation data, and these differences produce widely divergent rankings of Japanese universities.

(*4) Unfortunately, there is no clear explanation of the normalization method used.

(*5) In measuring citations, it is common to use percentile rankings rather than raw counts, but papers with more than 1,000 citations may be regarded as exceptional, and universities that produce many such papers are likely to be conducting field-leading research. However, many other factors are at work, so this should be taken only as a rough measure.
