Communication and the Japanese Language

“Seaman” Virtual Pet Creator Working on Chat-Capable AI

Not Much of a Conversationalist

The humanoid robot Pepper was unveiled on June 5, 2014, winning initial popularity in part for its promise of boosting high street sales. When I visited a familiar store to check how its Pepper was getting on, however, there was no sign of it in its usual place. One of the assistants told me, “Recently nobody talks to it, and it’s not working properly, so we don’t put it out.” I got the same kind of comment when I called another outlet. Finally, at the third store, I found a Pepper.

Its chest display said that it was on duty, and it looked eager to get working, but it was true that nobody paid it any notice. Feeling sorry for it, I struck up a conversation.

Me: Hello.

Pepper: Hello.

Me: Are you on duty?

Pepper: I can’t understand you. How about a little practice?

I went through a timing and diction drill.

Pepper: Please ask me a question, like what music or films I like.

Me: What music do you like?

Pepper: I like “Sekai ni hitotsu dake no hana” [the SMAP megahit].

Me: You like SMAP?

Pepper: I don’t understand that. Is it OK if I speak first?

Apparently Pepper had never heard of SMAP.

Cute assistants like Pepper and Sharp’s combination robot/smartphone Robohon are known for being able to recognize human speech to hold simple conversations. As described above, however, a natural chat does not come easily, and talking with them requires a certain knack.

What is the situation in other countries? After Amazon released the world’s first smart speaker, the Echo, in 2014, it soon faced competition from Google Home, Apple’s HomePod, and the Harman Kardon Invoke, which runs Microsoft’s Cortana, as the IT giants successively threw their hats into the ring. In Japan, there is the Clova Wave speaker marketed by the messaging giant Line, as well as local-language versions of Google Home and Amazon Echo.

Each has its own built-in virtual assistant: Amazon’s Alexa, Google Assistant, Apple’s Siri, Microsoft’s Cortana, and Clova. This last one, Line’s assistant, was developed to respond to both Japanese and Korean. No doubt, many readers have encountered these assistants in their smartphones or computers and have asked them for a weather update, used them to get information on local restaurants and shops, or instructed them to call or text a friend.

Smart speaker virtual assistants can read the news, play music, and tell jokes if required. If more assistants are installed in automotive systems, televisions, and air conditioning units, it will be possible to control those by voice as well. Amazon Echo is estimated to have sold 11 million units as of the end of 2016. The US survey firm eMarketer found that in May 2017, Amazon had a 70% share of the country’s market and that 35.7 million Americans used smart speakers at least once a month.

Pioneering Voice Recognition in a Virtual Pet Game

Now that the world is getting ready to switch from keyboards to voice recognition, there is a need for a Japanese-speaking AI conversation engine. Saitō “Yoot” Yutaka, creator of the bestselling Seaman virtual pet series, is among those working on the challenge. In 2015, he established the Seaman Artificial Intelligence Research Center.

“I’m getting on now, so I’ve thought about retiring. But it’s been eighteen years since I first made Seaman and I’ve still got all the knowledge from working on the later versions. I’m the only person who can build a Japanese conversation engine.”

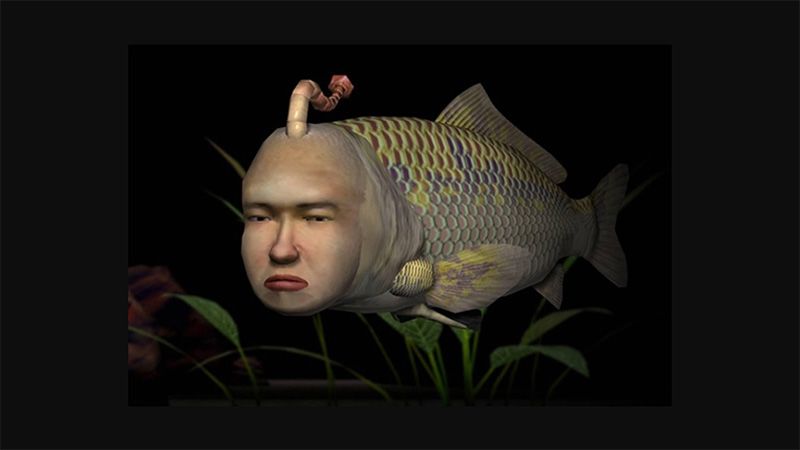

Released in 1999, Seaman was one of the first games to use voice recognition. The titular creature has a fish body and a human head. As players raise it through various stages in its life cycle, the Seaman responds and reacts to what they say to it. At the time, however, voice recognition was quite primitive, so the Seaman often failed to understand what players said. As a last resort, Saitō designed it to get angry and criticize the player’s pronunciation when this happened. This successfully covered up the flaw, and the arrogance of the Seaman character became part of the game’s appeal.

Seaman (© 1998–2017 OpenBook Inc.)

With a little inventiveness, Seaman managed to give the superficial impression of natural conversation. The development team put together scenarios that anticipated what the player would say and prepared appropriate replies for Seaman. The total number of scenarios was said to be enough to fill 20 telephone directories, and Saitō recorded all of Seaman’s lines himself. These scenarios represent the knowledge that he has built up.
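To make the scenario approach concrete, here is a minimal Python sketch of a scripted reply table with a scolding fallback. It is only an illustration of the general technique described above, not Seaman’s actual code: the `SCENARIOS` entries, the `FALLBACK` line, and the `normalize` and `reply` functions are all hypothetical.

```python
# Hypothetical illustration of scenario-based replies; not Seaman's real data.
# Each anticipated player utterance maps to a reply written in advance.
SCENARIOS = {
    "hello": "What do you want?",
    "how old are you": "Old enough. How old are you?",
}

# When nothing matches, the character blames the player instead of
# admitting that recognition failed.
FALLBACK = "I can't understand you. Work on your pronunciation."


def normalize(utterance: str) -> str:
    """Lowercase, trim surrounding punctuation, and collapse whitespace."""
    return " ".join(utterance.lower().strip(" ?!.").split())


def reply(utterance: str) -> str:
    """Return the scripted reply, or the scolding fallback if none matches."""
    return SCENARIOS.get(normalize(utterance), FALLBACK)


if __name__ == "__main__":
    print(reply("Hello!"))
    print(reply("Do you like SMAP?"))
```

A table like this only ever covers what its authors anticipated, which is why anything unscripted falls through to the fallback line.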

“Amazon Echo just answers individual questions. These kinds of products take the same approach as I did with Seaman—it’s not artificial intelligence at all. We’re still only halfway to a conversation engine that knows what someone talking to it actually wants to say. AI can currently deal with requests like ‘Buy me some tickets,’ but we’re working on an AI that can make broader responses, so if someone says, ‘I got 100%,’ it will reply, ‘That’s great. That’s the second time, right?’”

Tearing Down Japanese Grammar

The first stage for Saitō was discarding current ideas and redefining Japanese grammar. For example, with the verb taberu (to eat), the negative imperative taberu na is not the only way to tell someone not to consume something. Saitō gives the following examples of increasingly forceful orders.

食べるなよ taberu na yo (Don’t eat it.)

食べるなって言ってんだろ taberu na tte itten daro (I told you not to eat it.)

食べたらぶっ殺す tabetara bukkorosu (I’ll kill you if you eat it.)

Japanese also often omits a clearly stated subject, such as “I” or “you.” However, even if the pronoun itself is missing, the verb form can make the subject clear, and an AI needs to be able to pick up on this.

While Saitō was working on the voice for Seaman and recording the plethora of scenarios, he noticed issues to do with pitch in Japanese.

“Seaman couldn’t tell the difference between the rising pitch of the kurabu [club] where you go dancing and the falling pitch of the kurabu where you do activities after school. There’s a difference too between saying taberu and asking if someone wants to eat with a rising tone, taberu?” Saitō explains how pitch has its own rules, giving the example of a father asking his daughter who she wants to marry. When she says “Takashi,” he suggests the father’s dismayed response “Takashi?” could be assigned a value of 2, say, to denote his level of disagreement. This grammar, which is not to be found in textbooks, is required for accurate voice recognition.

Saitō has his eyes—and ears—on the next stage of intelligent machine interaction.

By redefining grammar, assigning numerical values to it, and incorporating these into a program, Saitō believed he would be able to teach an AI Japanese.
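As a rough illustration of what assigning numerical values to pitch might look like in a program, the Python sketch below pairs a word with a pitch contour and looks up a reading. Everything in it is an assumption made for the example: the `Reading` class, the `PITCH_LEXICON` table, the contour labels (“rising,” “falling,” “flat”), and the `interpret` function are hypothetical, and Saitō’s actual system is not described in that detail. Only the kurabu distinction and the disagreement value of 2 for “Takashi?” come from his examples.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Reading:
    meaning: str
    disagreement: int = 0  # hypothetical scale; 0 means no disagreement


# (romanized word, pitch contour) -> reading. The contour labels are
# assumptions for this sketch; "flat" for a plain statement is a guess.
PITCH_LEXICON = {
    ("kurabu", "rising"): Reading("club where you go dancing"),
    ("kurabu", "falling"): Reading("after-school club"),
    ("taberu", "flat"): Reading("statement: eat"),
    ("taberu", "rising"): Reading("question: do you want to eat?"),
    ("takashi", "rising"): Reading("dismayed echo of the name", disagreement=2),
}


def interpret(word: str, contour: str) -> Optional[Reading]:
    """Look up the reading for a word spoken with a given pitch contour."""
    return PITCH_LEXICON.get((word.lower(), contour))


if __name__ == "__main__":
    print(interpret("kurabu", "rising"))
    print(interpret("Takashi", "rising"))
```

The point of the sketch is simply that the same string of sounds yields different entries once pitch is part of the lookup key.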

“A year and a half after positing that what is currently considered Japanese grammar does not reflect reality, I am recording the conjugations of this new grammar every day and forming them into a system with my staff. I ran my theory past Professor Uchida Satoru at Kyūshū University’s Faculty of Languages and Cultures, and he was greatly impressed. Now he’s part of the project.”

Despite the praise, Saitō felt some discouragement from his conversation with Uchida.

“As a game creator, I find it fundamentally fascinating to tear down existing structures and build new ones on top. Once I broke gaming conventions with my new games and now I’m destroying grammar to make a new version. So having someone understand my idea without getting angry, or even say that it’s already been suggested, was a little disappointing, in a way. I don’t think the elite, who were raised on textbooks, could make this kind of weird AI. Building a road to a fresh destination is a job for someone like me in the entertainment business.”

Protecting Japan’s AI Industry

Will the local-language Amazon Echo capture the Japanese market?

“I see myself in the volunteer corps fighting to protect Japan’s AI industry from foreign forces. It’s obvious that native Japanese speakers should make a Japanese conversation engine. And if we succeed, it should be easy enough to make English and other language versions.”

I have tried the Japanese version of Amazon Echo. It may have been a problem with how I spoke to the machine, but I felt that it would have been quicker to search for the weather or turn the music on myself than to make myself understood using the speaker.

It seems that we are still in the early days of voice recognition. If an AI is developed that—as Saitō hopes—can do more than respond to simple requests, how will that change everyday life?

Saitō “Yoot” Yutaka

Chief executive officer of OpenBook Inc., director of the Seaman Artificial Intelligence Research Center, and designer of games including SimTower, Seaman, Ōdama, and Aero Porter. Has also written books, such as Hanbāgā o matsu 3 pun no nedan (The Price of Waiting Three Minutes for a Hamburger), Makkintosshu densetsu (The Legend of Macintosh), and Ringo no ki no shita de: Appuru wa ika ni shite Nihon ni jōriku shita no ka (Beneath the Apple Tree: How Apple Broke into the Japanese Market).

(Originally published in Japanese on February 13, 2018. Text by Kuwahara Rika of Power News. Photographs by Imamura Takuma unless otherwise stated. Banner photo: Seaman creator and AI researcher Saitō Yutaka.)